Introduction

This guided walkthrough provides step-by-step instructions for building an iOS screen sharing app with Ant Media Server’s iOS SDK and WebRTC, using a Broadcast Upload Extension.

Screen sharing functionality allows users to broadcast their device screens in real-time, enabling various use cases like remote collaboration, live presentations, and interactive streaming.

Through this tutorial, you will leverage the power of the Ant Media Server’s iOS SDK and WebRTC to build an iOS application capable of seamless screen sharing with minimal effort.

By following the outlined steps, you will gain a solid understanding of the necessary configurations and the code snippets required to integrate and utilize the Ant Media Server iOS SDK effectively.

If you are completely new to the Ant Media Server WebRTC iOS SDK, I highly recommend checking out our guide “4 Simple Steps to Build WebRTC iOS Apps and Stream Like a Pro.” To make things even more accessible, we have also prepared a video tutorial that complements that guide, providing a visual walkthrough and practical demonstration to assist you along the way.

Prerequisites

Before we begin, ensure that you have the following prerequisites in place:

- Xcode installed on your development machine.

- A basic understanding of iOS development using Swift.

- Familiarity with the Ant Media iOS SDK and its integration process.

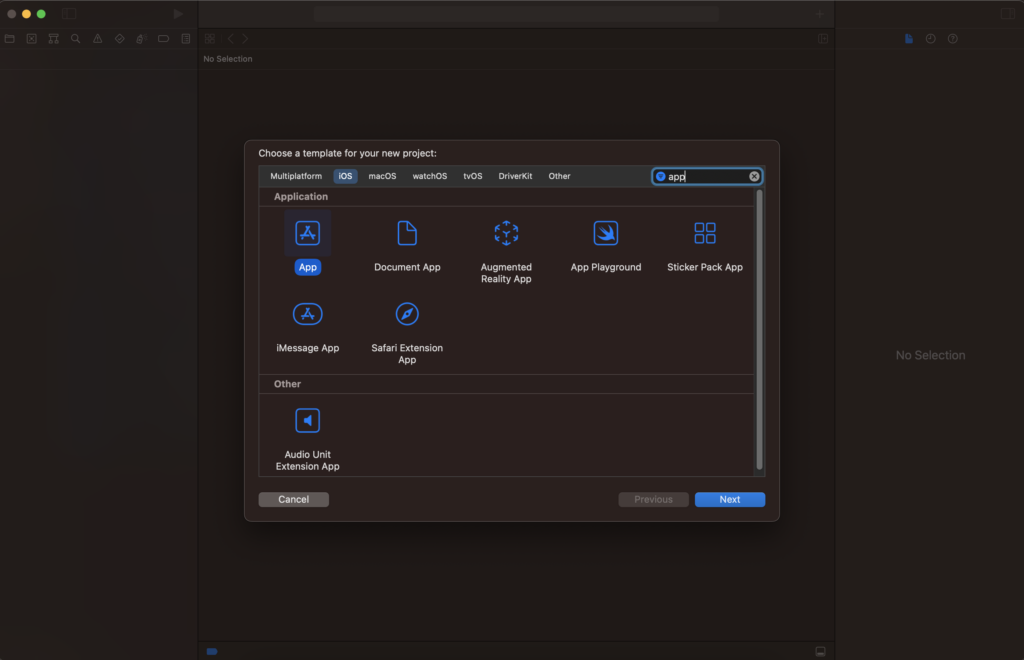

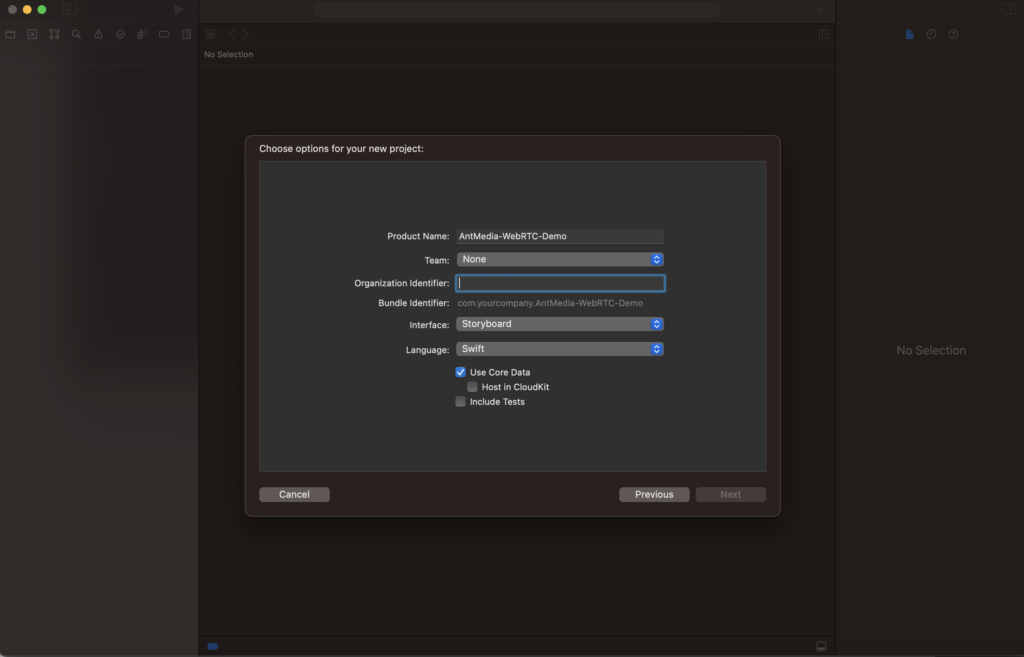

Step 1: Create an iOS Project in Xcode

- Let’s begin by creating a new iOS project in Xcode.

- Set the project name and select your team. Create a project with the Storyboard interface.

- The editor should be ready with all prerequisites selected.

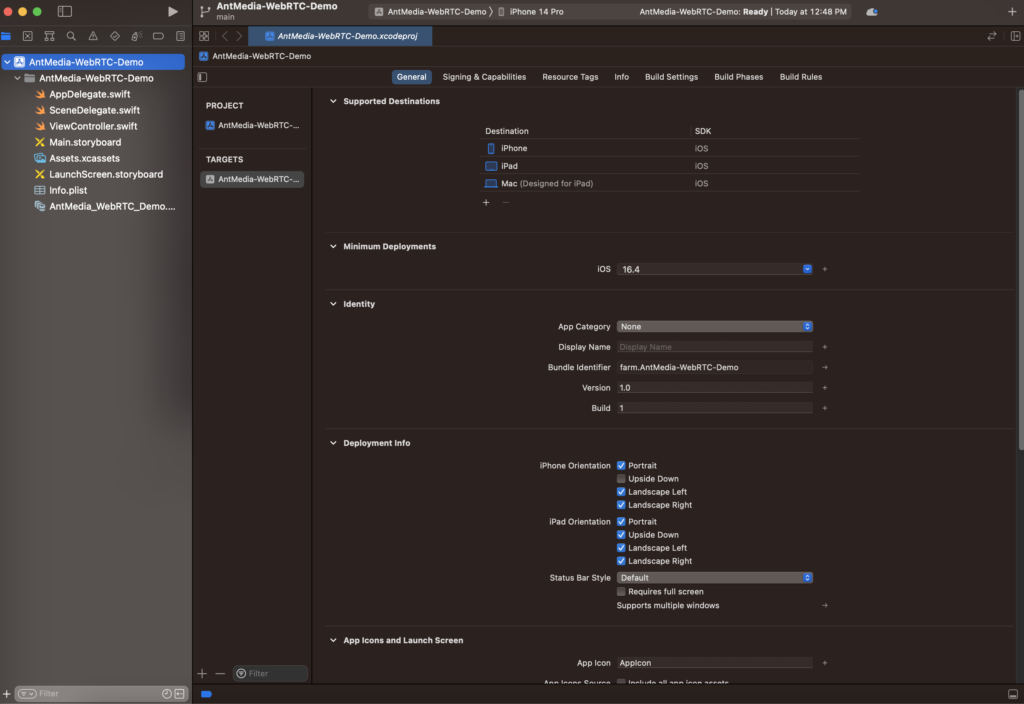

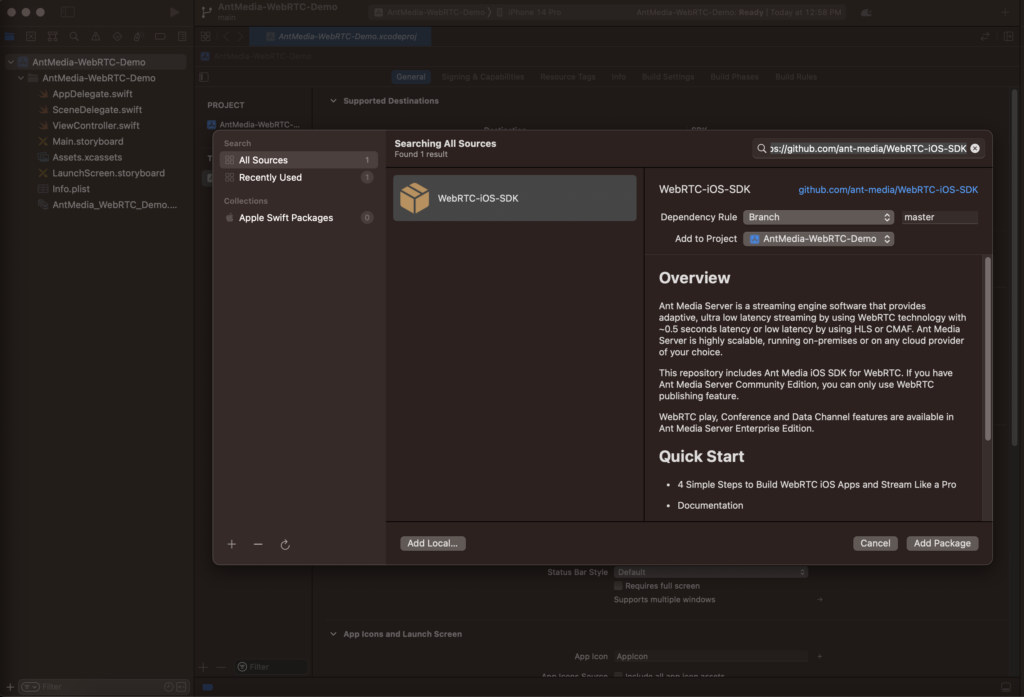

Step 2: Setting up the Project

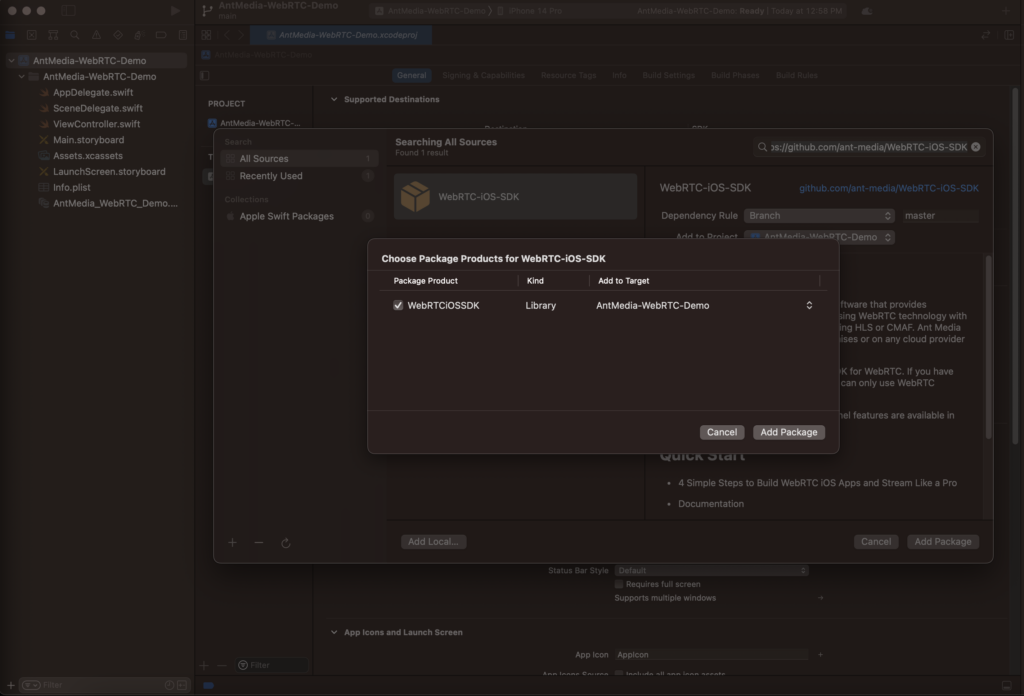

- Add the WebRTC-iOS-SDK dependency by right-clicking your project and selecting “Add Package” from the context menu.

- Enter the GitHub repository URL (https://github.com/ant-media/WebRTC-iOS-SDK) into the package URL box and click the Add Package button.

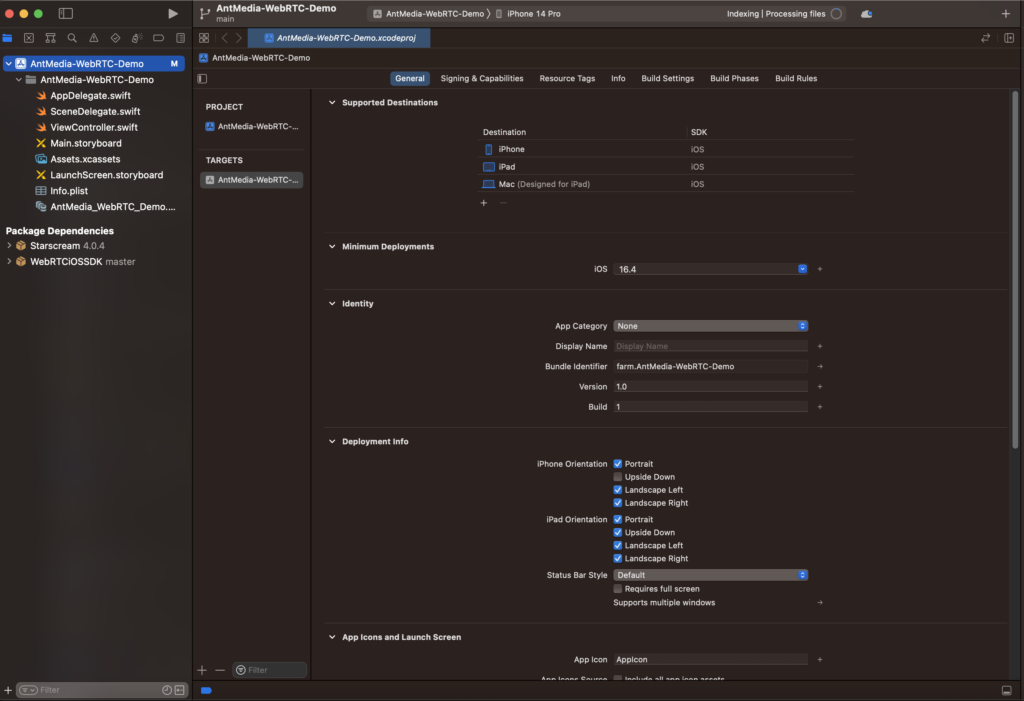

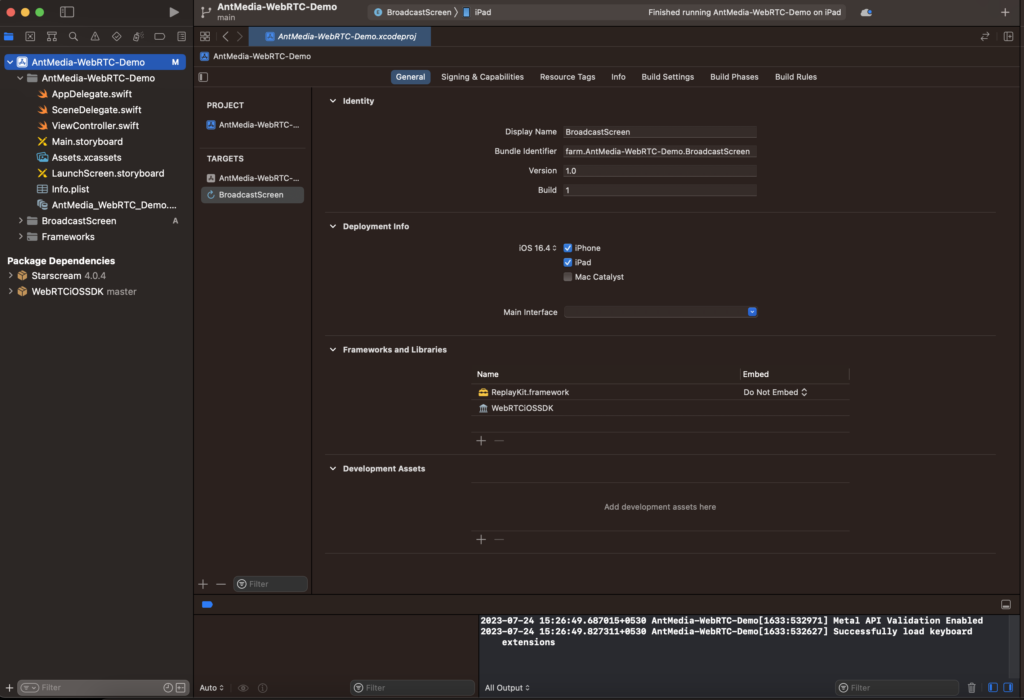

- Add the WebRTC iOS SDK dependency: click the Add Package button on the next screen. The package dependencies are listed as shown in the image below.

- The WebRTC iOS SDK is now added to your project’s dependencies.

Step 3: Implementing Screen Sharing

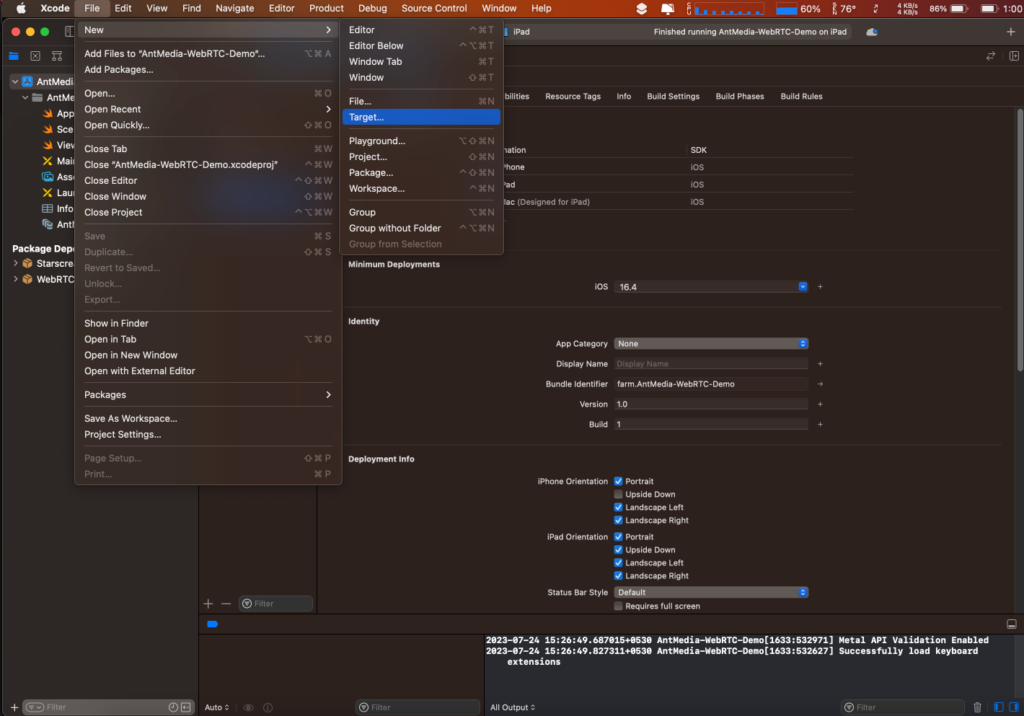

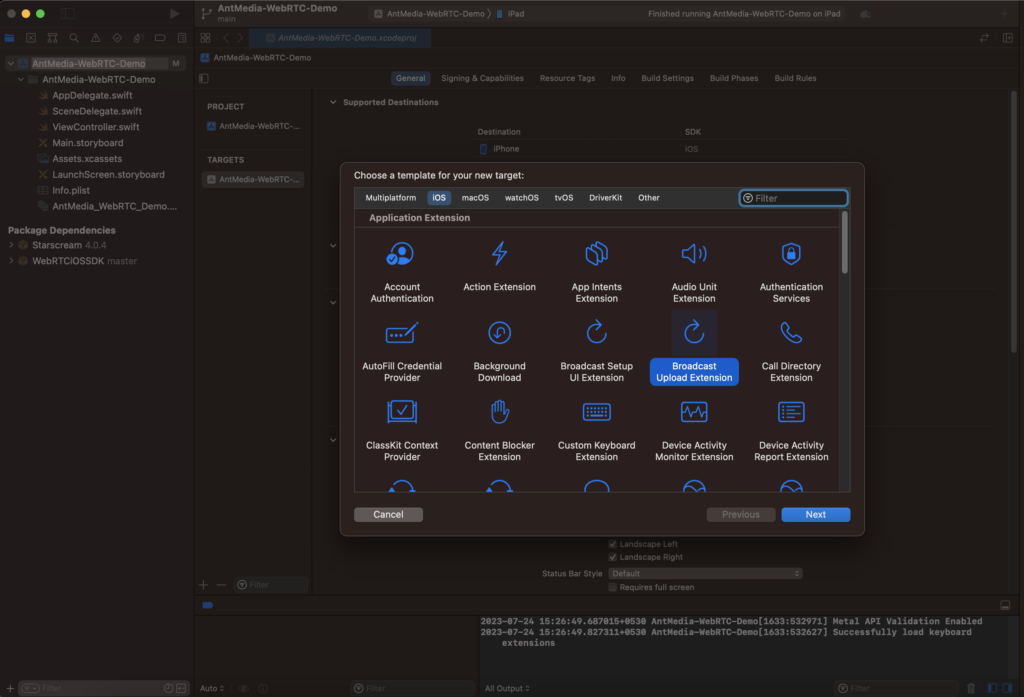

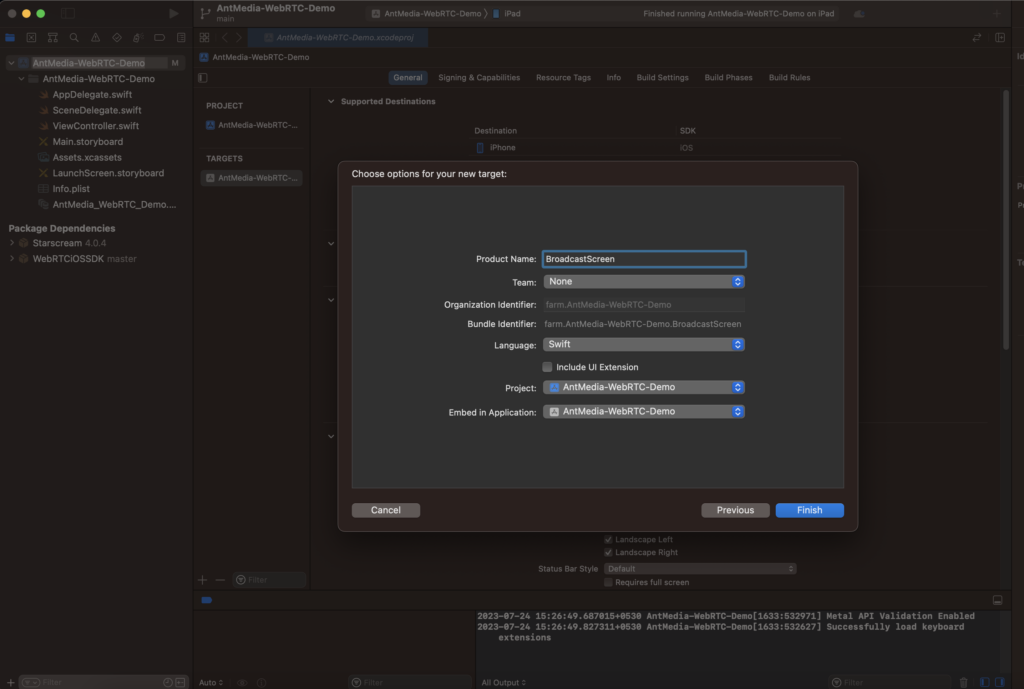

- Add a new target (File > New > Target…).

- Choose Broadcast Upload Extension and click Next.

- Name the product and click Next.

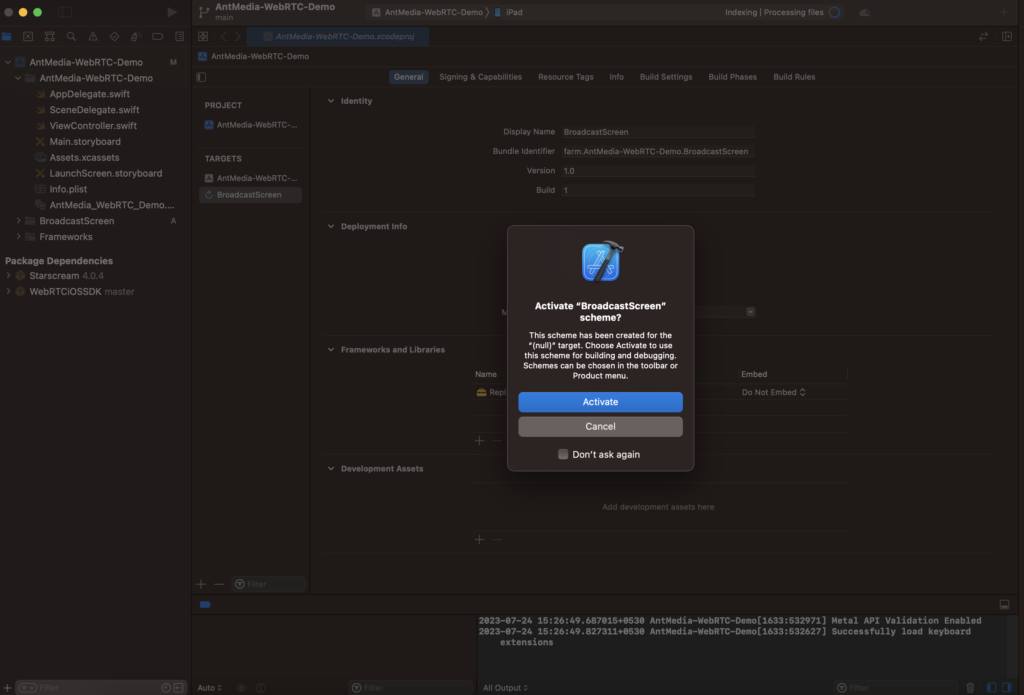

- When Xcode asks whether to activate the new extension’s scheme, click Activate.

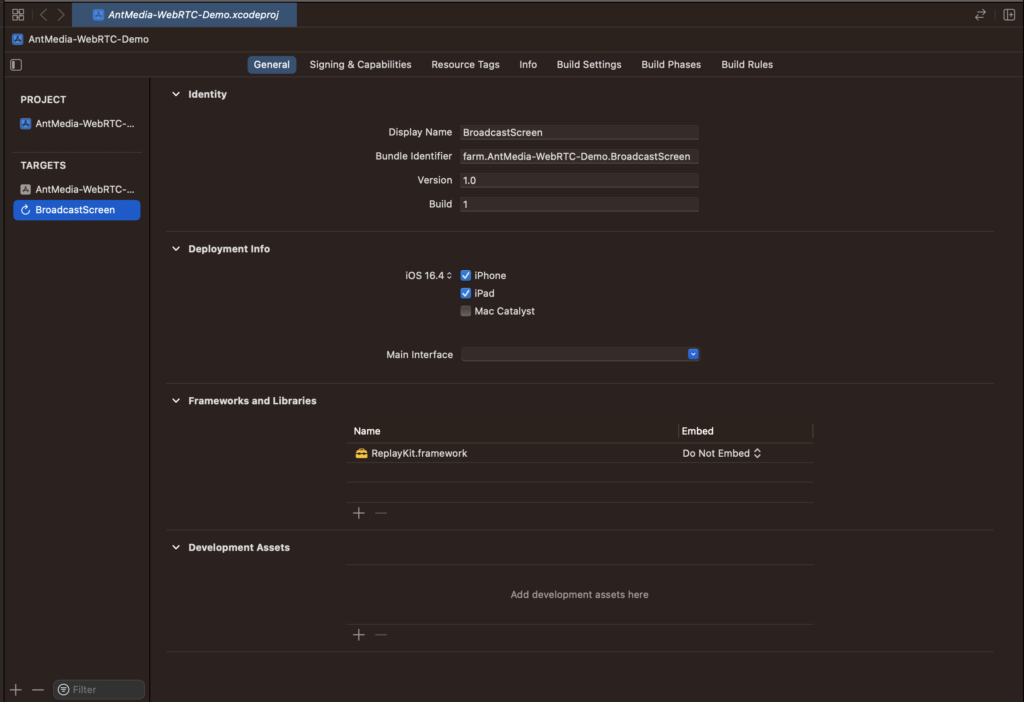

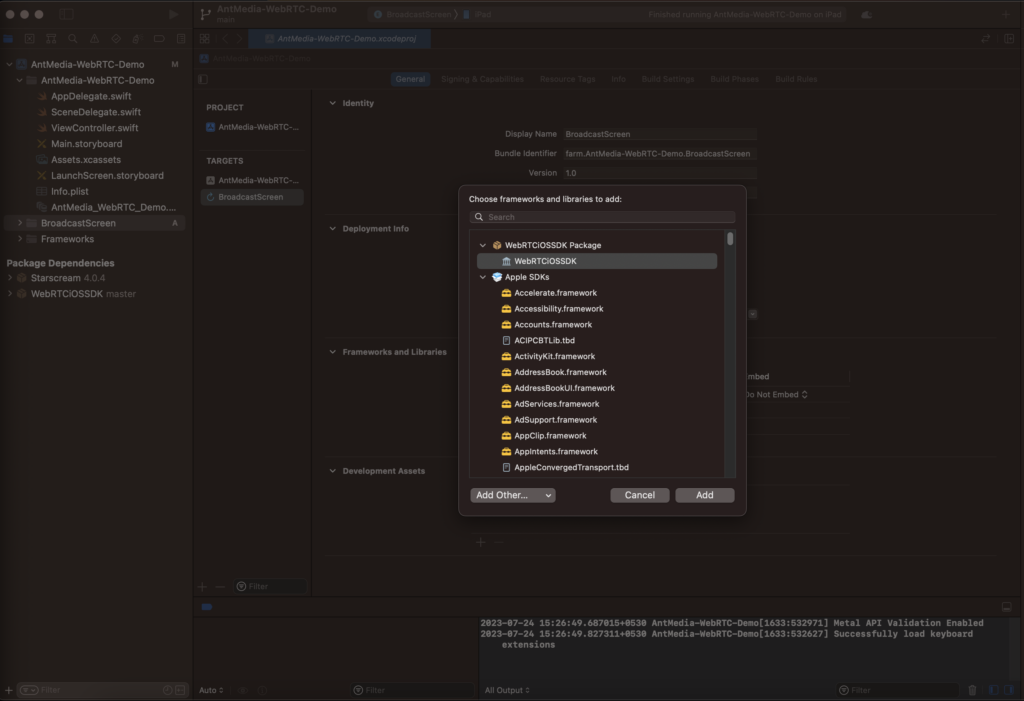

- In the extension target’s General tab, click the plus button under Frameworks and Libraries to add the WebRTC-iOS-SDK dependency you just imported.

- Select the dependency: WebRTC-iOS-SDK

- Update the version of the Xcode project as needed.

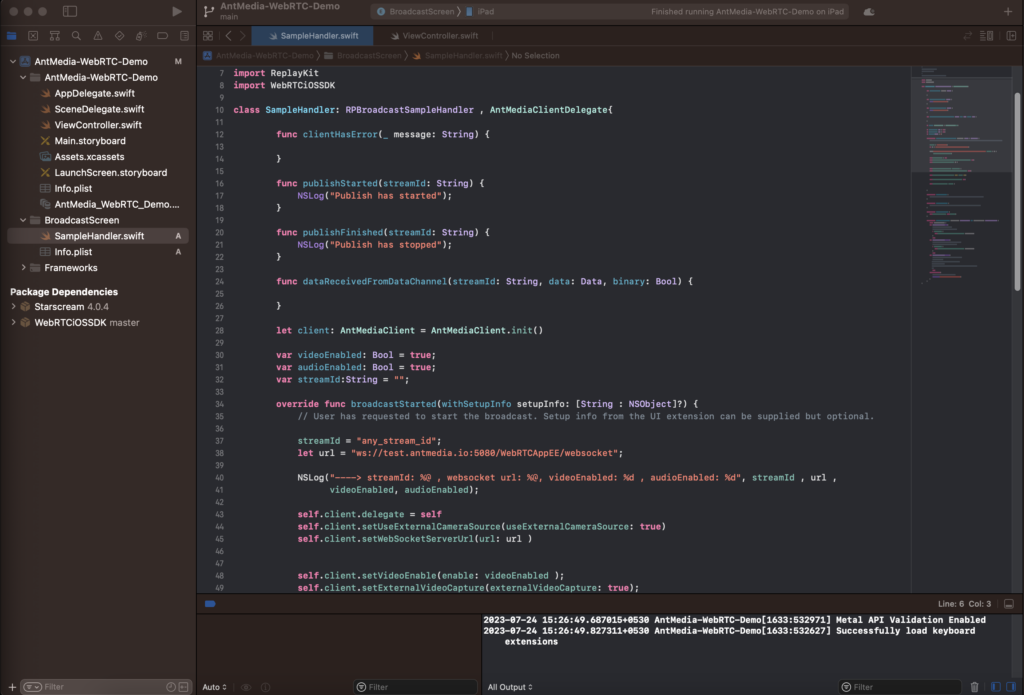

- Open the SampleHandler.swift file under the BroadcastScreen extension and add the code from the following gist: https://gist.github.com/mekya/163a90523f7e7796cf97a0b966f2d61b

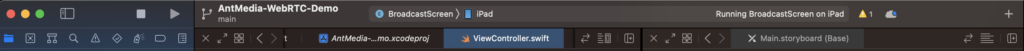

Make sure to set your server URL and a streamId in SampleHandler.swift.

- Optional: You may enable a split editor view by clicking “Add editor to the right.”
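A mistyped server URL is a common reason a broadcast never shows up on the server. The sketch below is a hypothetical helper, not part of the SDK or the gist: it assumes the usual Ant Media WebSocket layout `scheme://host:port/<AppName>/websocket` (for example, port 5443 for `wss` and an application such as `WebRTCAppEE`), so confirm the port and application name against your own server. The stream ID check is only a conservative sanity test of our own, not an Ant Media rule.

```swift
import Foundation

// Hypothetical helper: builds a WebSocket signaling URL in the form
// scheme://host:port/<AppName>/websocket (an assumed layout; verify on your server).
func makeWebSocketURL(host: String, port: Int, appName: String, secure: Bool = true) -> URL? {
    var components = URLComponents()
    components.scheme = secure ? "wss" : "ws"
    components.host = host
    components.port = port
    components.path = "/\(appName)/websocket"
    return components.url
}

// Hypothetical sanity check: rejects empty stream IDs and IDs containing whitespace.
func isUsableStreamId(_ streamId: String) -> Bool {
    !streamId.isEmpty && streamId.rangeOfCharacter(from: .whitespacesAndNewlines) == nil
}

let url = makeWebSocketURL(host: "example.com", port: 5443, appName: "WebRTCAppEE")
print(url?.absoluteString ?? "invalid")
// prints wss://example.com:5443/WebRTCAppEE/websocket
```

Printing the built URL once, before pasting it into SampleHandler.swift, makes typos in the host or application name easy to spot.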

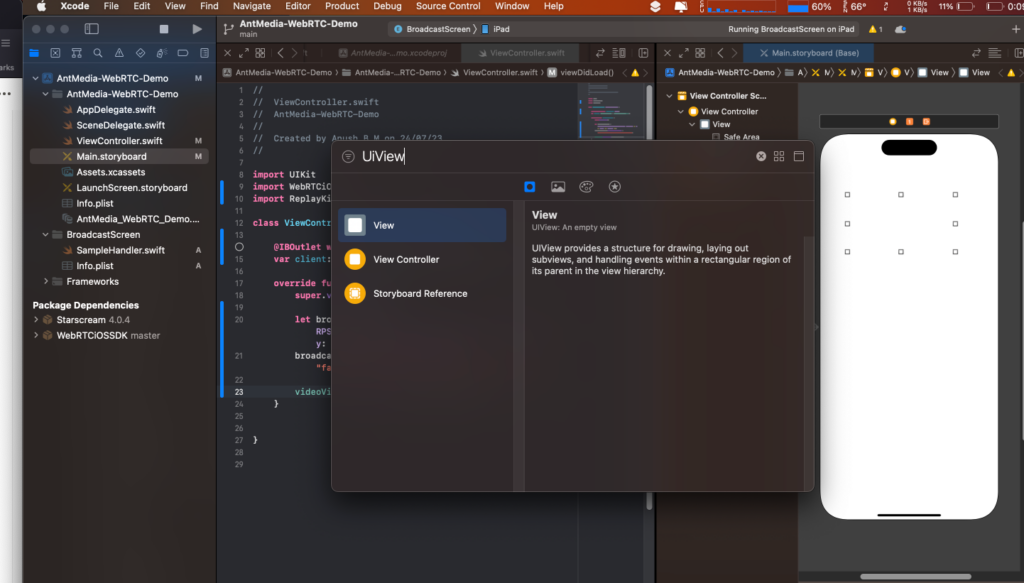

The “Add editor to the right” option is the last button in the second row, as shown in the screenshot, and lets you work in a split-screen view.

- Navigate to Main.storyboard in the left-hand menu. Open the Library by selecting View > Show Library from the menu, then enter UIView in the search box, as shown in the image below.

Drag the View from the Library and drop it onto the Main.storyboard. Afterwards, adjust the size of the UIView to match the image below.

(Optional) Customize the color and theme as needed.

- If you have split the editor, you should now have ViewController.swift open in one pane and Main.storyboard open in the other.

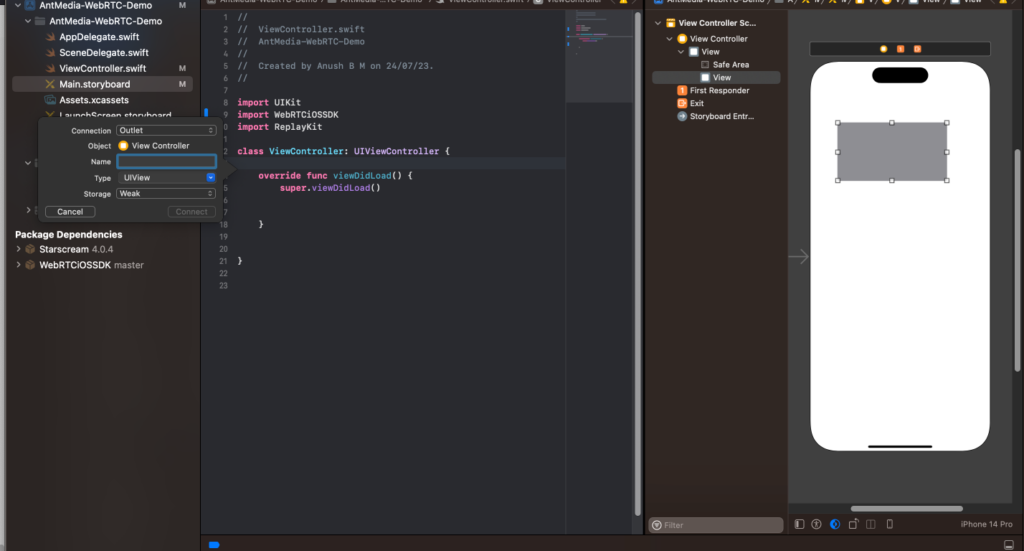

- Right-click the UIView element in Main.storyboard, drag it over to the ViewController.swift file, and release the right-click.

This opens a popup, as shown, where you can name your outlet (the code below uses videoView).

- With the ViewController file open in your Xcode project, follow these steps to add the RPSystemBroadcastPickerView to the view.

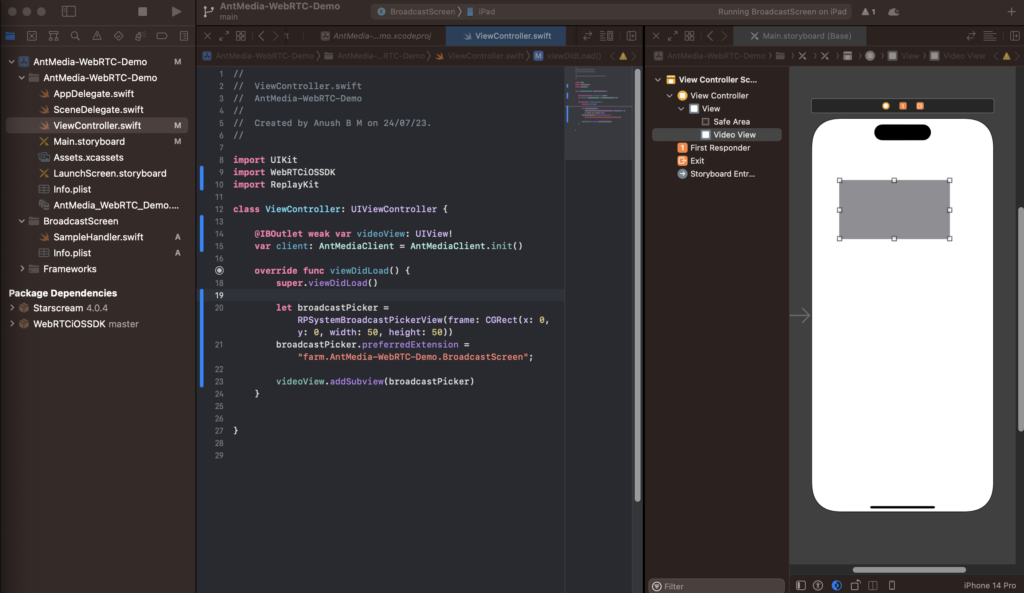

Copy and paste the following code into ViewController.swift:


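```swift
import UIKit
import ReplayKit
import WebRTCiOSSDK

class ViewController: UIViewController {

    // Container view connected from Main.storyboard; the picker button is added to it.
    @IBOutlet weak var videoView: UIView!

    // Ant Media client from the original sample. The screen itself is streamed
    // by the broadcast extension (SampleHandler.swift), not by this view controller.
    var client: AntMediaClient = AntMediaClient()

    override func viewDidLoad() {
        super.viewDidLoad()

        // System-provided button that opens the ReplayKit broadcast sheet.
        let broadcastPicker = RPSystemBroadcastPickerView(frame: CGRect(x: 0, y: 0, width: 50, height: 50))

        // Bundle identifier of your Broadcast Upload Extension target,
        // e.g. "com.example.ScreenShare.BroadcastScreen".
        broadcastPicker.preferredExtension = "<YOUR_BUNDLE_IDENTIFIER>"

        videoView.addSubview(broadcastPicker)
    }
}
```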
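If `preferredExtension` does not match your extension’s bundle identifier, the picker may not preselect your extension. Xcode also expects an embedded extension’s identifier to be prefixed with the containing app’s identifier. The hypothetical check below, which is not part of ReplayKit or the SDK, encodes the usual `<app bundle ID>.<ExtensionName>` convention; the identifiers in it are made-up examples.

```swift
import Foundation

// Hypothetical pre-flight check for the value passed to preferredExtension.
// Follows the common convention "<containing app ID>.<ExtensionName>",
// e.g. "com.example.ScreenShare.BroadcastScreen".
func isValidExtensionBundleId(_ extensionId: String, containingAppId: String) -> Bool {
    let prefix = containingAppId + "."
    // Requires the app ID prefix plus a non-empty extension name after the dot.
    return extensionId.hasPrefix(prefix) && extensionId.count > prefix.count
}

print(isValidExtensionBundleId("com.example.ScreenShare.BroadcastScreen",
                               containingAppId: "com.example.ScreenShare"))
// prints true
```

The check also catches the case where the `<YOUR_BUNDLE_IDENTIFIER>` placeholder was never replaced.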

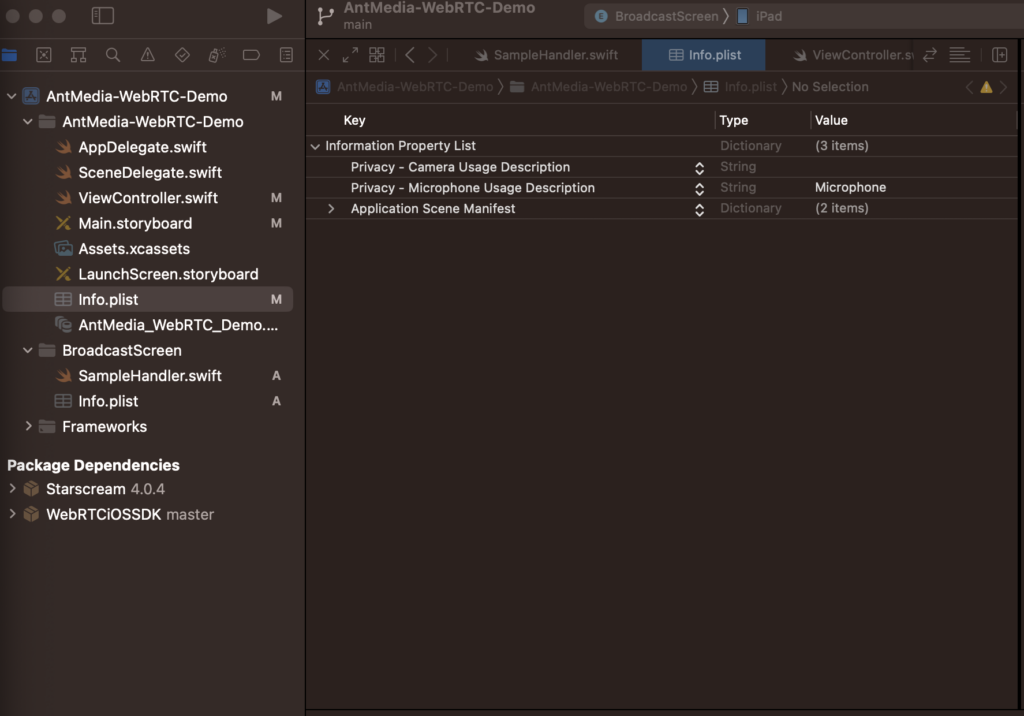

- Add a privacy description to Info.plist: right-click Info.plist and add a Privacy - Microphone Usage Description (NSMicrophoneUsageDescription) entry, as shown below.
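The raw Info.plist entry is the `NSMicrophoneUsageDescription` key with a user-facing string. The sketch below is a hypothetical helper for checking that the entry exists and is not blank; in an app you could pass it `Bundle.main.infoDictionary ?? [:]`. The description text shown is only an example.

```swift
import Foundation

// Raw plist form of the entry (example wording):
// <key>NSMicrophoneUsageDescription</key>
// <string>Microphone access lets you stream audio along with your screen.</string>

// Hypothetical helper: returns true if the Info.plist dictionary contains a
// non-blank microphone usage description string.
func hasMicrophoneUsageDescription(_ info: [String: Any]) -> Bool {
    guard let text = info["NSMicrophoneUsageDescription"] as? String else { return false }
    return !text.trimmingCharacters(in: .whitespacesAndNewlines).isEmpty
}

print(hasMicrophoneUsageDescription([
    "NSMicrophoneUsageDescription": "Microphone access lets you stream audio along with your screen."
]))
// prints true
```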

Step 4: Testing and Optimization

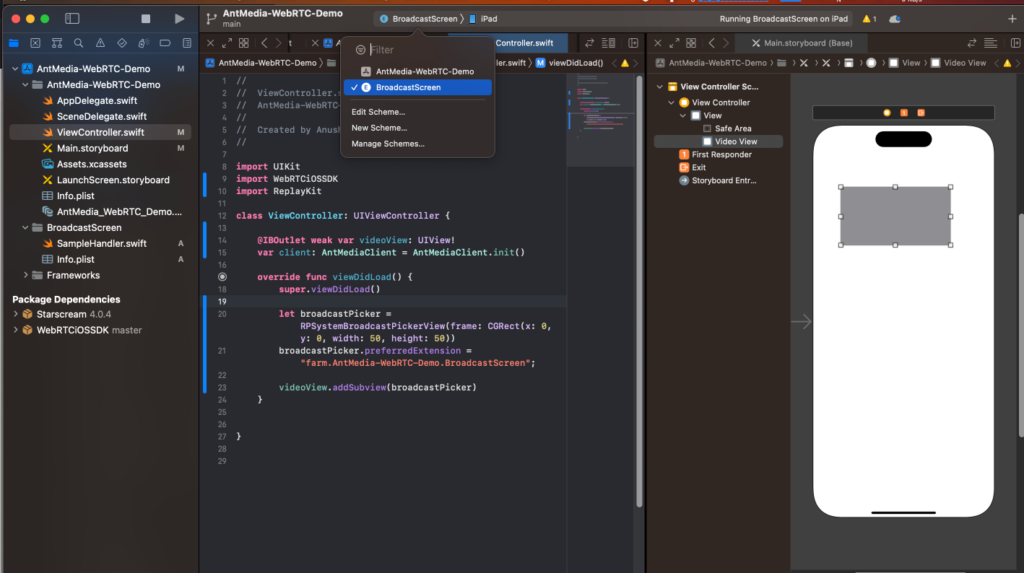

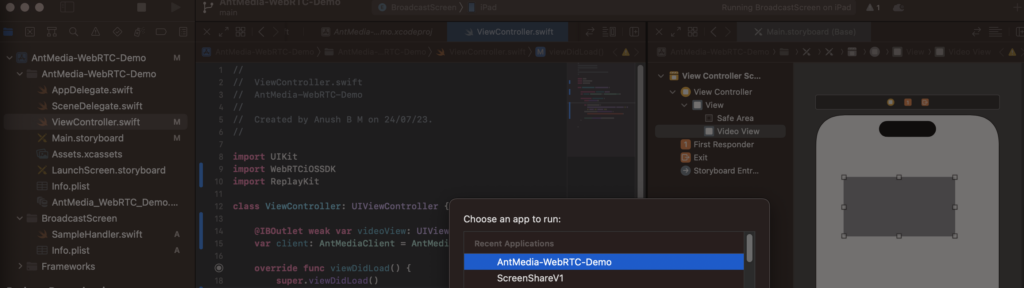

- Select the BroadcastScreen target.

- Run BroadcastScreen. When Xcode asks you to choose an app to run, select the original application and click Run.

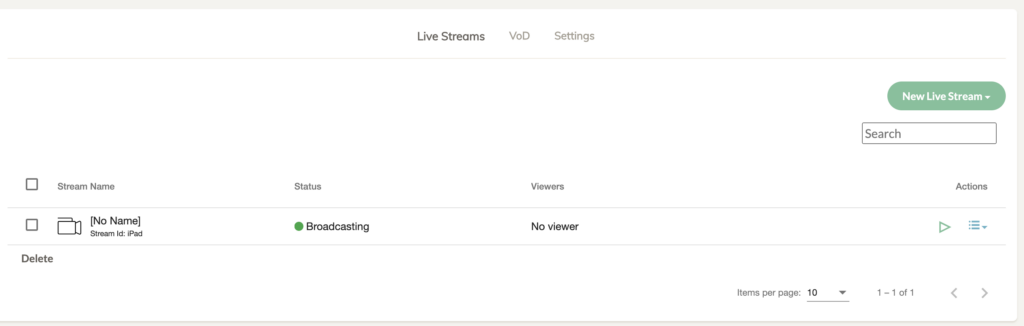

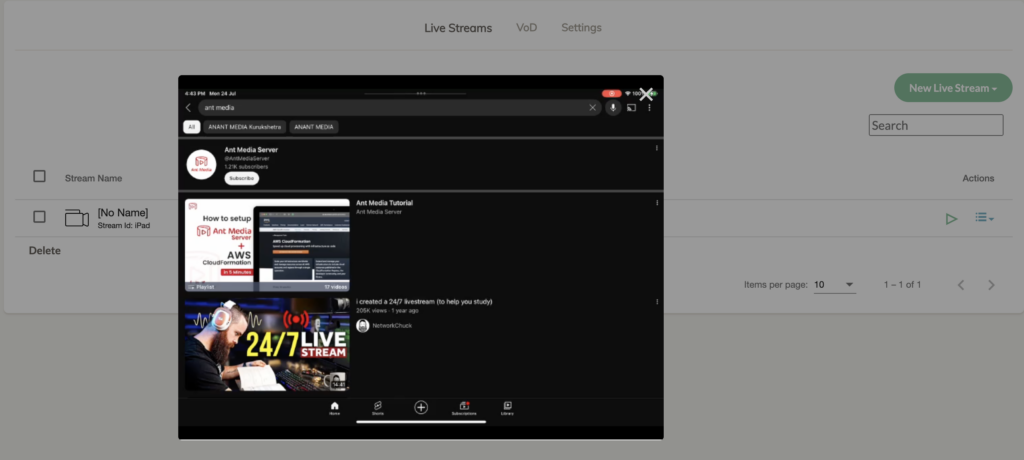

- You are ready to start streaming your screen: tap the broadcast button in the app, choose your extension, and tap Start Broadcast.

- You should see a live stream on your Ant Media Server.

- You can now stream your device screen with audio.
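Besides the web panel, you can check the stream programmatically. The sketch below assumes Ant Media’s v2 REST endpoint `/<AppName>/rest/v2/broadcasts/<streamId>` and that a live stream reports `"status": "broadcasting"`; verify both against your server’s REST API documentation, and note that your server’s REST security settings may restrict who can call it. The helper names and the `BroadcastInfo` type are hypothetical, and only the decoding step is shown; fetch the JSON with `URLSession` in a real app.

```swift
import Foundation

// Hypothetical subset of the broadcast JSON returned by the REST API.
struct BroadcastInfo: Decodable {
    let streamId: String
    let status: String
}

// Builds the assumed REST URL for a single broadcast.
func broadcastURL(host: String, port: Int, appName: String, streamId: String) -> URL? {
    var components = URLComponents()
    components.scheme = "https"
    components.host = host
    components.port = port
    components.path = "/\(appName)/rest/v2/broadcasts/\(streamId)"
    return components.url
}

// Returns true if the JSON decodes and reports a live ("broadcasting") stream.
func isLive(_ json: Data) -> Bool {
    guard let info = try? JSONDecoder().decode(BroadcastInfo.self, from: json) else { return false }
    return info.status == "broadcasting"
}

let sample = Data(#"{"streamId":"stream1","status":"broadcasting"}"#.utf8)
print(isLive(sample))
// prints true
```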

Conclusion

Congratulations!

You have successfully completed the guided walkthrough for implementing iOS screen sharing with the Ant Media iOS SDK and WebRTC.

By following these steps, you can now seamlessly stream your iOS device screen to a browser and enjoy various collaborative and interactive use cases. This walkthrough has equipped you with the necessary knowledge to integrate the Ant Media iOS SDK effectively and leverage its power for real-time screen sharing.

For more samples, please visit the WebRTC-iOS-SDK repository (https://github.com/ant-media/WebRTC-iOS-SDK) and run the sample WebRTC iOS application.

Happy streaming!