Learn how you can send and receive video in GStreamer using the Ant Media Server in this step-by-step GStreamer tutorial.

We’ll establish a WebSocket connection to Ant Media Server, send play or publish requests over it, exchange the signaling messages (offer, answer, and ICE candidates), and finally play or publish the stream with GStreamer.

All the necessary code is explained throughout the guide, but you can also use the full code sample from this tutorial. The code was tested with Ant Media Server Enterprise Edition 2.5.1 and 2.6.0, along with GStreamer 1.20.3, on Windows 10, macOS Ventura 13.0.1 (M1), and Ubuntu 20.04.

Prerequisites: You should have a basic working knowledge of WebRTC and GStreamer. We still provide a quick overview of GStreamer for newcomers. If you don’t need it, skip straight to the “How to install GStreamer” section.


What is GStreamer and what is it used for?

GStreamer is a pipeline-based multimedia framework that links together a wide variety of media processing systems to complete complex workflows. For instance, GStreamer can be used to build a system that reads files in one format, processes them, and exports them in another. The pipeline design serves as a base to create many types of multimedia applications such as streaming media broadcasters, transcoders, video editors and media players.

GStreamer’s advantages are:

- Free and Open-Source: No charge. Lots of people work together to make it better.

- Multimedia Framework: Think of it as a big playground for your computer where it can play with pictures and sounds. It knows how to take different types of pictures and sounds, change them if needed, and then save them in a new way. It’s like a magical transformer toy for your computer.

- Flexible and Easy to Use.

- Handles All Kinds of Media. GStreamer supports a wide variety of media-handling components, including simple audio playback, audio and video playback, recording, and streaming.

- Works on Different Devices: It is designed to work on a variety of operating systems, e.g. the BSDs, OpenSolaris, Android, macOS, iOS, Windows, OS/400.

How to install GStreamer:

Ubuntu:

Run the following command:

apt-get install libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev libgstreamer-plugins-bad1.0-dev gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-libav gstreamer1.0-doc gstreamer1.0-tools gstreamer1.0-x gstreamer1.0-alsa gstreamer1.0-gl gstreamer1.0-gtk3 gstreamer1.0-qt5 libjson-glib-dev gstreamer1.0-nice gstreamer1.0-pulseaudio

Windows:

There are two installer binaries, gstreamer-1.0 and gstreamer-devel. Make sure you install both of them.

Download the GStreamer Windows installer

Mac:

There are two installer binaries, gstreamer-1.0 and gstreamer-devel. Make sure you install both of them.

Download the GStreamer Mac installer and export the path to GStreamer:

export PKG_CONFIG_PATH="/Library/Frameworks/GStreamer.framework/Versions/Current/lib/pkgconfig${PKG_CONFIG_PATH:+:$PKG_CONFIG_PATH}"

Building the code

- Clone this AntMedia GStreamer WebRTC repository.

- I have supplied the binaries of the compiled WebSocket libraries for win64_x86, macOS (M1), and Linux (x86) in the libs directory. You can compile the WebSocket library yourself from here if you are using some other operating system or CPU architecture.

- Compile the sendRecvAnt.c file with gcc using the following command:

gcc sendRecvAnt.c -o sendRecvAnt `pkg-config --cflags --libs gstreamer-1.0 gstreamer-webrtc-1.0 gstreamer-sdp-1.0 json-glib-1.0` ./libs/platform_name_librws.lib

- Run the compiled file.

Note: These commands publish to or play from the WebRTCAppEE application on Ant Media Server. When playing, make sure the stream is already being published before you try to ingest it in GStreamer.

Sending a stream:

sendRecvAnt --ip AMS_IP

Receiving a stream:

sendRecvAnt --ip AMS_IP --mode play -i stream1 -i stream2 -i stream3 .......

Command line argument options

Usage:

sendRecvAnt.exe [OPTIONS] - Gstreamer Antmedia WebRTC Publish and Play

Help Options:
  -h, --help        Show help options

Application Options:
  -s, --ip          ip address of antmedia server
  -p, --port        Antmedia server Port default : 5080
  -m, --mode        should be true for publish mode and false for play mode default true
  -i, --streamids   you can pass n number of streamid to play like this -i streamid -i streamid ....

Code Explanation

Refer to this AntMedia GStreamer WebRTC repository for the code. Let’s go over the important parts of this GStreamer tutorial: connecting to the WebSocket, signaling, and sending and receiving media.

Establishing WebSocket Connection

Ant Media Server uses WebSockets to share the signaling information, such as the SDP offer, the SDP answer, and the ICE candidates, so the first task is to establish the WebSocket connection to Ant Media Server.

I am using this WebSocket client library to establish the WebSocket connection with Ant Media Server, but you can use any other WebSocket library instead.

WebSocket connection code:

_socket = rws_socket_create();
g_assert(_socket);
rws_socket_set_scheme(_socket, "ws");
rws_socket_set_host(_socket, ws_server_addr);
rws_socket_set_path(_socket, "/WebRTCAppEE/websocket");
rws_socket_set_port(_socket, 5080);
rws_socket_set_on_disconnected(_socket, &on_socket_disconnected);
rws_socket_set_on_received_text(_socket, &on_socket_received_text);
rws_socket_set_on_connected(_socket, &on_socket_connected);

Signaling

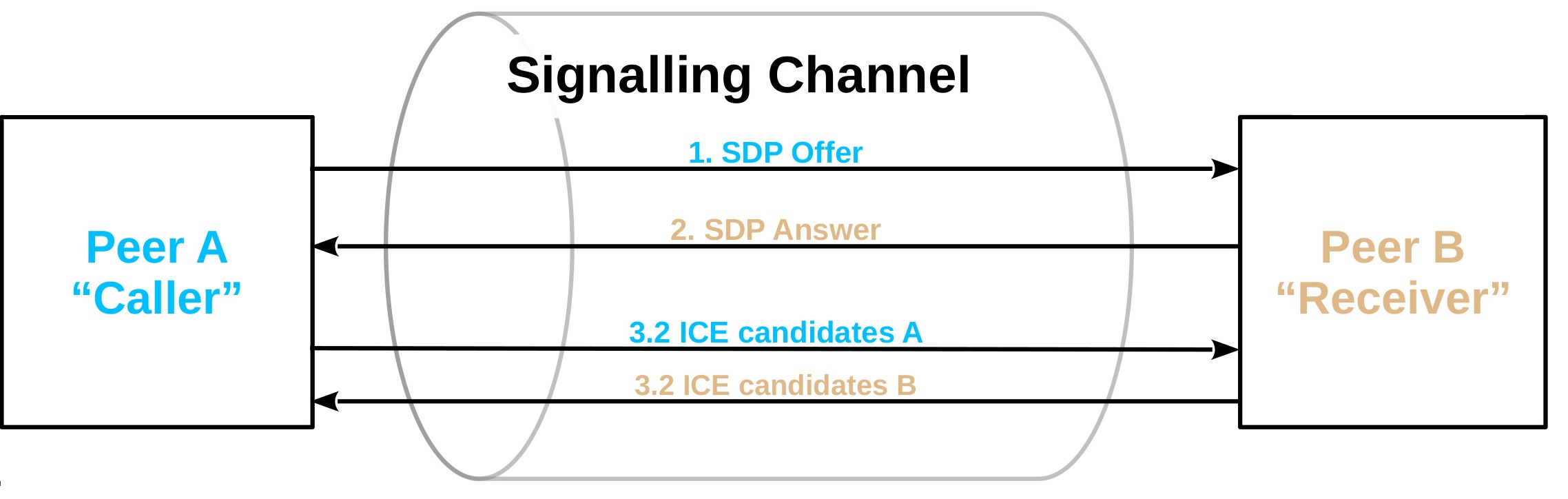

Signaling is the process in which two peers share an SDP offer, an SDP answer, and ICE candidates with each other.

Two WebRTC peers that want to communicate have to exchange this information over some transport mechanism.

WebRTC doesn’t specify a transport mechanism for the signaling information. You can use anything you like, from WebSocket to XMLHttpRequest or even QR codes, to exchange the signaling information between the two peers.

Ant Media Server uses a WebSocket as a Signaling Channel.

Signaling Publish Requests

These are the steps to publish a stream from GStreamer to Ant Media Server:

- Send a publish request to Ant Media Server through the WebSocket:

{"command":"publish","streamId":"stream1","video":true,"audio":true}

- The server will send back a start message.

- Create a WebRTC element, put the element in a playing state, then create the offer and send it to Ant Media Server:

{"command":"takeConfiguration","streamId":"stream1","type":"offer","sdp":"create offer here"}

- The server will reply with an answer SDP in a WebSocket message:

{"command":"takeConfiguration","streamId":"stream1","type":"answer","sdp":"Server's Answer here"}

- Set the answer on the WebRTC element.

- The ICE candidates are exchanged.

- The stream will be sent to Ant Media Server.

Signaling Playing Request

These are the steps to play a stream from Ant Media Server in GStreamer:

- Send a play request to Ant Media Server through the WebSocket:

{"command":"play","streamId":"stream1","room":"","trackList":[]}

- The server will send a message back through the WebSocket with an offer:

{"command":"takeConfiguration","streamId":"stream1","type":"offer","sdp":"Server's Offer here"}

- The WebRTC element sets the received offer as its remote description.

- Generate an answer SDP and send it to Ant Media Server:

{"command":"takeConfiguration","streamId":"stream1","type":"answer","sdp":"Our Answer here"}

- The ICE candidates are exchanged.

- The on_incoming_stream callback is called when we receive any media stream (audio or video). Link the stream to an appropriate playing element and play the stream.
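Both flows come down to reacting to the "command" and "type" fields of each incoming WebSocket message. The following is a minimal sketch of that decision logic in plain C, not the code from the repository: the enum and the function name next_signaling_action are hypothetical, and it assumes the two fields have already been extracted from the JSON (for example with json-glib).

```c
#include <assert.h>
#include <string.h>

/* What the client should do next (illustrative names, not from the repository). */
typedef enum {
    ACTION_IGNORE,             /* unknown or irrelevant message */
    ACTION_CREATE_OFFER,       /* publish flow: server sent "start" */
    ACTION_SET_REMOTE_ANSWER,  /* publish flow: server answered our offer */
    ACTION_CREATE_ANSWER,      /* play flow: server sent its offer */
    ACTION_ADD_ICE_CANDIDATE   /* both flows: remote ICE candidate arrived */
} SignalingAction;

/*
 * command: value of the "command" field of the received message.
 * type:    value of the "type" field, or NULL when the message has none.
 */
SignalingAction next_signaling_action(const char *command, const char *type)
{
    assert(command != NULL);

    if (strcmp(command, "start") == 0)
        return ACTION_CREATE_OFFER;
    if (strcmp(command, "takeCandidate") == 0)
        return ACTION_ADD_ICE_CANDIDATE;
    if (strcmp(command, "takeConfiguration") == 0 && type != NULL) {
        if (strcmp(type, "offer") == 0)
            return ACTION_CREATE_ANSWER;
        if (strcmp(type, "answer") == 0)
            return ACTION_SET_REMOTE_ANSWER;
    }
    return ACTION_IGNORE;
}
```

In a real client, each action would map to the corresponding webrtcbin signal, such as "create-offer", "create-answer", or "set-remote-description".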

Note: In both publish and play signaling, we also send and receive ICE candidates as messages through the WebSocket:

{"candidate":"Ice Candidate","streamId":"stream1","linkSession":null,"label":0,"id":"0","command":"takeCandidate"}
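As a rough illustration of the candidate message, here is a small sketch that formats it with snprintf from the standard library. The function name build_candidate_message is hypothetical, and using the media line index for both "label" and "id" is an assumption based on the example message, not something confirmed by the repository. The sketch refuses inputs that would need JSON escaping instead of escaping them.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Writes a takeCandidate message into buf.
 * Returns the message length, or -1 if buf is too small or an input
 * contains a quote or backslash (this sketch does not escape them).
 * Assumption: "label" and "id" both carry the media line index.
 */
int build_candidate_message(char *buf, size_t buf_size,
                            const char *stream_id,
                            const char *candidate,
                            unsigned int mline_index)
{
    int written;

    assert(buf != NULL && stream_id != NULL && candidate != NULL);

    /* Reject characters that would break the JSON string literals. */
    if (strpbrk(candidate, "\"\\") != NULL || strpbrk(stream_id, "\"\\") != NULL)
        return -1;

    written = snprintf(buf, buf_size,
                       "{\"candidate\":\"%s\",\"streamId\":\"%s\","
                       "\"linkSession\":null,\"label\":%u,\"id\":\"%u\","
                       "\"command\":\"takeCandidate\"}",
                       candidate, stream_id, mline_index, mline_index);

    /* snprintf reports the length it needed; treat truncation as failure. */
    if (written < 0 || (size_t)written >= buf_size)
        return -1;
    return written;
}
```

The resulting string could then be passed to rws_socket_send_text, the same call the tutorial uses for its other messages.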

Sending Play / Publish Request

Now that the WebSocket connection is established, we can send a publish or play request to the server so that the signaling process can start.

Publish Request:

We can send a publish request to Ant Media Server with a WebSocket message:

{"command":"publish","streamId":"stream1","video":true,"audio":true}

The sample code below builds this message, except that it sets "audio" to FALSE:

JsonObject *publish_stream = json_object_new();
json_object_set_string_member(publish_stream, "command", "publish");
json_object_set_string_member(publish_stream, "streamId", "stream1");
json_object_set_boolean_member(publish_stream, "video", TRUE);
json_object_set_boolean_member(publish_stream, "audio", FALSE);
json_string = get_string_from_json_object(publish_stream);
rws_socket_send_text(socket, json_string);

When we send this publish request, Ant Media Server (AMS) replies with a start message over the WebSocket, which is received in the on_socket_received_text callback:

{"streamId":"stream1","command":"start"}

Then we can create the WebRTC object in GStreamer and send the offer SDP to Ant Media Server.

Creating the WebRTC element and setting up callbacks

webrtc = gst_element_factory_make("webrtcbin", webrtcbin_id);
g_assert(webrtc != NULL);
g_object_set(G_OBJECT(webrtc), "bundle-policy", GST_WEBRTC_BUNDLE_POLICY_MAX_BUNDLE, NULL);
g_object_set(G_OBJECT(webrtc), "stun-server", STUN_SERVER, NULL);
// called when ICE candidates are generated
g_signal_connect(webrtc, "on-ice-candidate", G_CALLBACK(send_ice_candidate_message), webrtcbin_id);
// called when a WebRTC stream is received
g_signal_connect(webrtc, "pad-added", G_CALLBACK(on_incoming_stream), NULL);
// on_offer_created is called when the offer is created
promise = gst_promise_new_with_change_func((GstPromiseChangeFunc)on_offer_created, (gpointer)to, NULL);
// create the offer
g_signal_emit_by_name(webrtc, "create-offer", NULL, promise);

The on_offer_created callback is called when the offer is created. In it, we set the local description and send the offer to Ant Media Server over the WebSocket:

g_signal_emit_by_name(webrtc, "set-local-description", offer, NULL);
play_stream = json_object_new();
json_object_set_string_member(play_stream, "command", "takeConfiguration");
json_object_set_string_member(play_stream, "streamId", stream_id);
json_object_set_string_member(play_stream, "type", "offer");
json_object_set_string_member(play_stream, "sdp", sdp_string);
json_string = get_string_from_json_object(play_stream);
rws_socket_send_text(_socket, json_string);
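An SDP is a multi-line text with CRLF line endings, so it has to be escaped before it can sit inside the "sdp" JSON string. The tutorial's code builds the message with json-glib rather than by hand, so the sketch below is only meant to show what the message looks like on the wire. It uses the standard library only; json_escape and build_sdp_message are hypothetical helper names, and the fixed 16 KB scratch buffer is an arbitrary assumption.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Escapes src for use inside a JSON string. Returns the length, or -1 if dst is too small. */
int json_escape(char *dst, size_t dst_size, const char *src)
{
    size_t out = 0;

    assert(dst != NULL && src != NULL);
    if (dst_size == 0)
        return -1;

    for (; *src != '\0'; src++) {
        unsigned char c = (unsigned char)*src;
        char piece[8];
        int n;

        if (c == '"')        n = snprintf(piece, sizeof piece, "\\\"");
        else if (c == '\\')  n = snprintf(piece, sizeof piece, "\\\\");
        else if (c == '\r')  n = snprintf(piece, sizeof piece, "\\r");
        else if (c == '\n')  n = snprintf(piece, sizeof piece, "\\n");
        else if (c == '\t')  n = snprintf(piece, sizeof piece, "\\t");
        else if (c < 0x20)   n = snprintf(piece, sizeof piece, "\\u%04x", (unsigned int)c);
        else                 n = snprintf(piece, sizeof piece, "%c", c);

        /* Keep one byte free for the terminating NUL. */
        if (out + (size_t)n >= dst_size)
            return -1;
        memcpy(dst + out, piece, (size_t)n);
        out += (size_t)n;
    }
    dst[out] = '\0';
    return (int)out;
}

/*
 * Builds a takeConfiguration message; type is "offer" or "answer".
 * Assumes stream_id and type need no escaping. Returns the length or -1.
 */
int build_sdp_message(char *buf, size_t buf_size, const char *stream_id,
                      const char *type, const char *sdp)
{
    char escaped[16384]; /* arbitrary limit chosen for this sketch */
    int written;

    assert(buf != NULL && stream_id != NULL && type != NULL && sdp != NULL);
    if (json_escape(escaped, sizeof escaped, sdp) < 0)
        return -1;

    written = snprintf(buf, buf_size,
                       "{\"command\":\"takeConfiguration\",\"streamId\":\"%s\","
                       "\"type\":\"%s\",\"sdp\":\"%s\"}",
                       stream_id, type, escaped);
    if (written < 0 || (size_t)written >= buf_size)
        return -1;
    return written;
}
```

A JSON library takes care of this escaping automatically, which is one reason to prefer it over hand-built strings in production code.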

Once the offer is sent, Ant Media Server replies with the answer SDP in a WebSocket message. Once we receive the answer, we can set the remote description:

answersdp = gst_webrtc_session_description_new(GST_WEBRTC_SDP_TYPE_ANSWER, sdp);
promise = gst_promise_new();
g_signal_emit_by_name(webrtc, "set-remote-description", answersdp, promise);
gst_promise_interrupt(promise);

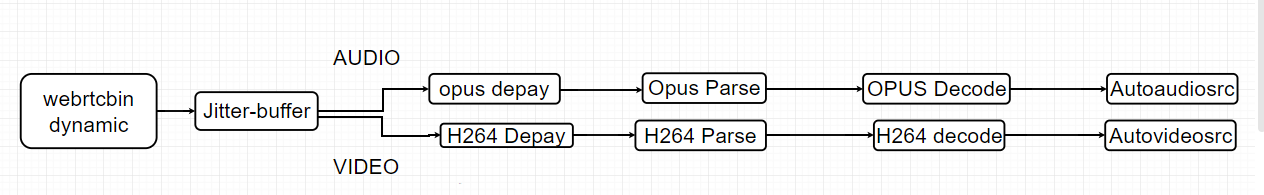

Once this is done WebRTC will try to connect through ICE candidates and If the connection is successful then we will receive audio and video from Ant Media Server in on_incoming_stream callbackthen we can connect the rest of the pipeline to play the received video or audio

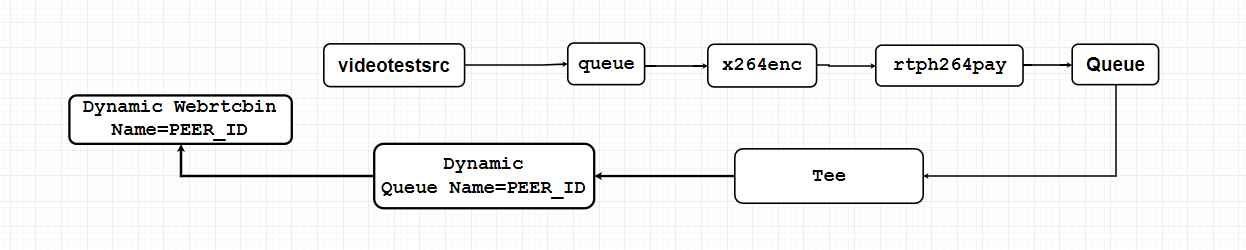

attaching the video stream to WebRTC Element for publising

Play Request:

We can send a play request to Ant Media Server with the following message:

{"command":"play","streamId":"stream1","room":"","trackList":[]}

JsonObject *play_stream = json_object_new();

json_object_set_string_member(play_stream, "command", "play");

json_object_set_string_member(play_stream, "streamId", play_streamids[i]);

json_object_set_string_member(play_stream, "room", "");

json_object_set_array_member(play_stream, "trackList", array);

json_string = get_string_from_json_object(play_stream);

rws_socket_send_text(socket, json_string);

When we send this play request, Ant Media Server replies with a WebSocket message containing the SDP offer, which we receive in the on_socket_received_text callback:

{"command":"takeConfiguration","streamId":"stream1","type":"offer","sdp":""}

Once the SDP offer is received, we can set the remote description, create the answer, and send it back to Ant Media Server.

if (g_strcmp0(type, "offer") == 0)

{

offersdp = gst_webrtc_session_description_new(GST_WEBRTC_SDP_TYPE_OFFER, sdp);

promise = gst_promise_new();

g_signal_emit_by_name(webrtc, "set-remote-description", offersdp, promise); // setting the remote description

gst_promise_interrupt(promise);

gst_promise_unref(promise);

promise = gst_promise_new_with_change_func(on_answer_created, webrtcbin_id, NULL);// This will be called when the answer is created

g_signal_emit_by_name(webrtc, "create-answer", NULL, promise);//creating Answer

}

When the on_answer_created callback is called, we can set the local description and send the answer SDP back to AMS:

g_signal_emit_by_name(webrtc, "set-local-description", answer, promise);
sdp_answer_json = json_object_new();
json_object_set_string_member(sdp_answer_json, "sdp", sdp_text);
json_object_set_string_member(sdp_answer_json, "type", "answer");
json_object_set_string_member(sdp_answer_json, "streamId", (gchar *)webrtcbin_id);
json_object_set_string_member(sdp_answer_json, "command", "takeConfiguration");
sdp_text = get_string_from_json_object(sdp_answer_json);
rws_socket_send_text(_socket, sdp_text);

Attaching the video stream to the WebRTC element for playing

Once this is done, WebRTC will try to connect through the ICE candidates. If the connection is successful, we receive the audio and video from Ant Media Server in the on_incoming_stream callback, and can then connect the rest of the pipeline to play them. To check how a published live stream looks to viewers, try the WebRTC sample.

GStreamer tutorial summary

This guide showed how to use GStreamer with Ant Media Server to send and receive video, walking step by step through the WebSocket connection, the signaling, and the media handling. Try our 30-day trial today!