Volumetric video is a media production technique that captures a three-dimensional space, such as a location, a person, or an object. The growing popularity of Virtual Reality (VR) and Augmented Reality (AR) has driven the development of volumetric video capture and streaming systems as a key technology. Spaceport, developed by Ant Media, is an end-to-end solution that captures dynamic scenes and offers a truly three-dimensional viewing experience with six degrees of freedom (6DoF). It also supports playback on VR headsets, web browsers, and mobile devices.

Default Setup

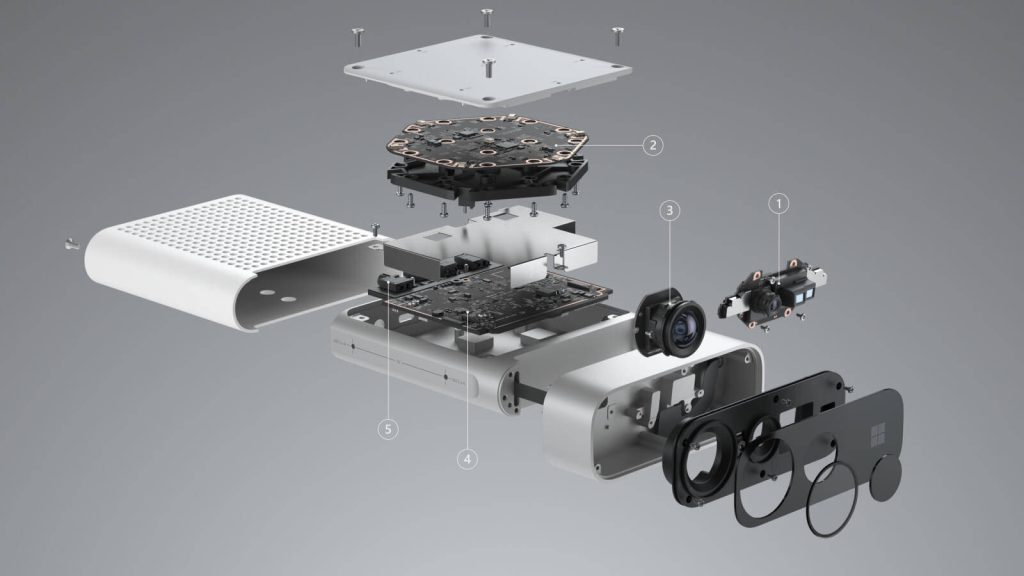

Compared with other capture systems, Spaceport offers a high-quality, low-cost volumetric capture solution. Our default setup uses six Azure Kinect cameras covering a reconstruction volume 1 m in diameter and 2 m high. Each camera is equipped with a 1-megapixel time-of-flight (ToF) depth sensor, so we can record 1024×1024 depth images and 1920×1080 RGB images at 15 frames per second.
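To put these numbers in perspective, the sketch below estimates the raw data rate of the default rig. It assumes 16-bit depth pixels and uncompressed 24-bit RGB color; both are assumptions for this back-of-envelope calculation, and real recordings usually compress the color stream, so actual storage needs are lower.

```python
# Back-of-envelope raw data rates for the default six-camera rig.
# Assumptions (not from the post): 16-bit depth pixels and uncompressed
# 24-bit RGB color frames. Compressed recordings will be much smaller.

CAMERAS = 6
FPS = 15

DEPTH_W, DEPTH_H, DEPTH_BYTES_PER_PX = 1024, 1024, 2   # 16-bit depth (assumed)
COLOR_W, COLOR_H, COLOR_BYTES_PER_PX = 1920, 1080, 3   # 24-bit RGB (assumed)


def bytes_per_second(width: int, height: int, bytes_per_px: int, fps: int) -> int:
    """Raw, uncompressed stream rate in bytes per second."""
    return width * height * bytes_per_px * fps


depth_rate = bytes_per_second(DEPTH_W, DEPTH_H, DEPTH_BYTES_PER_PX, FPS)
color_rate = bytes_per_second(COLOR_W, COLOR_H, COLOR_BYTES_PER_PX, FPS)
per_camera = depth_rate + color_rate
rig_total = CAMERAS * per_camera

if __name__ == "__main__":
    mb = 1_000_000
    print(f"depth per camera: {depth_rate / mb:.1f} MB/s")
    print(f"color per camera: {color_rate / mb:.1f} MB/s")
    print(f"whole rig:        {rig_total / mb:.1f} MB/s")
```

Under these assumptions each camera produces about 125 MB/s (roughly 31.5 MB/s of depth and 93.3 MB/s of color), or about 750 MB/s for all six cameras, which illustrates why compression matters before streaming.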

The six cameras are placed around the scene to create 360-degree point clouds under varying lighting conditions. The ideal distance from each camera to the center of the recording volume is about 1 to 3 m. The subject stands in the middle of the roughly 1 square meter capture area. Adjacent cameras are about 60 degrees apart, and all cameras point toward the center of the recording volume.
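With cameras evenly spaced on a ring, the 60-degree spacing follows directly from 360 / 6. The sketch below computes, for a hypothetical radius, where each camera sits in the horizontal plane and which heading points it at the center. The coordinate convention (x-z floor plane, yaw measured from +x toward +z), the `CameraPose` and `ring_layout` names, and the 2 m default radius are assumptions for illustration; real rigs also vary camera height and tilt, which this ignores.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class CameraPose:
    index: int
    x: float        # metres, horizontal plane
    z: float        # metres, horizontal plane
    yaw_deg: float  # heading (from +x towards +z) that faces the centre


def ring_layout(num_cameras: int = 6, radius_m: float = 2.0) -> List[CameraPose]:
    """Space cameras evenly on a circle around the origin, each aimed at the centre.

    With six cameras the angular step is 360 / 6 = 60 degrees. The post
    suggests a camera-to-centre distance of roughly 1 to 3 m.
    """
    step = 2 * math.pi / num_cameras
    poses = []
    for i in range(num_cameras):
        angle = i * step
        x = radius_m * math.cos(angle)
        z = radius_m * math.sin(angle)
        # The direction from the camera to the origin is (-x, -z).
        yaw = math.degrees(math.atan2(-z, -x))
        poses.append(CameraPose(i, x, z, yaw))
    return poses


if __name__ == "__main__":
    for pose in ring_layout(6, radius_m=2.0):
        print(f"cam {pose.index}: x={pose.x:+.2f} m, z={pose.z:+.2f} m, "
              f"yaw={pose.yaw_deg:+.0f} deg")
```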

You can check the full hardware specs here.

Volumetric Capture, Registration, and Point Cloud Alignment

Aligning the six Azure Kinect cameras is the most important part of the volumetric capture process. Multi-view alignment is time-consuming, and if it is not done correctly, the result usually cannot represent all of the subject's detailed motion.

Spaceport creates a high-quality point cloud for each frame. For streamable, free-viewpoint video, the point clouds from all cameras must be registered precisely. Spaceport uses Robot Operating System (ROS) algorithms, the Point Cloud Library (PCL), and a calibration object to estimate the transformation matrix for each camera. The calibration object is a board with a checkerboard pattern attached. A person holds the object, and every camera is aligned to a reference camera.
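Once each camera's transformation matrix is known, merging amounts to mapping every point into the reference camera's coordinate frame and concatenating the results. The sketch below is a minimal pure-Python illustration of that step, not Spaceport's ROS/PCL code; the function names and the row-major 4×4 convention are assumptions, and estimating the matrices from the checkerboard (the hard part) is not shown. In PCL, the corresponding operation is `pcl::transformPointCloud`.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = List[List[float]]  # row-major 4x4 homogeneous transform


def yaw_translation(yaw_deg: float, tx: float, ty: float, tz: float) -> Matrix4:
    """Build a rigid transform: rotation about the vertical (y) axis, then translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(T: Matrix4, p: Point) -> Point:
    """Apply a 4x4 rigid transform (rotation + translation) to a 3D point."""
    x, y, z = p
    return (
        T[0][0] * x + T[0][1] * y + T[0][2] * z + T[0][3],
        T[1][0] * x + T[1][1] * y + T[1][2] * z + T[1][3],
        T[2][0] * x + T[2][1] * y + T[2][2] * z + T[2][3],
    )


def merge_into_reference(clouds: Sequence[Sequence[Point]],
                         extrinsics: Sequence[Matrix4]) -> List[Point]:
    """Map each camera's points into the reference frame and concatenate them.

    extrinsics[i] takes camera i's coordinates to the reference camera's
    coordinates; the reference camera itself uses the identity transform.
    """
    if len(clouds) != len(extrinsics):
        raise ValueError("need exactly one transform per camera")
    merged: List[Point] = []
    for cloud, T in zip(clouds, extrinsics):
        merged.extend(transform_point(T, p) for p in cloud)
    return merged


if __name__ == "__main__":
    identity = yaw_translation(0.0, 0.0, 0.0, 0.0)
    # Hypothetical opposite camera: 4 m in front of the reference, turned 180 degrees.
    opposite = yaw_translation(180.0, 0.0, 0.0, 4.0)
    # Both cameras see the same physical point 2 m in front of themselves.
    print(merge_into_reference([[(0.0, 0.0, 2.0)], [(0.0, 0.0, 2.0)]],
                               [identity, opposite]))
```

In the usage example both observations land at approximately (0, 0, 2) in the reference frame, which is exactly what a correct calibration should produce for a point seen by two cameras.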

Next, we reconstruct a 3D model of the real object from the input point clouds. For each frame, we generate a simplified, textured mesh from the point cloud, reconstructing the 3D surface as a triangle mesh.
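The post does not say how Spaceport simplifies its data, but a common step before meshing is voxel-grid downsampling, which PCL provides as `pcl::VoxelGrid`. The pure-Python sketch below illustrates that technique: every point that falls into the same cubic voxel is replaced by the voxel's centroid. The function name and voxel size are assumptions, and the surface reconstruction that follows (for example Poisson reconstruction) is not shown.

```python
import math
from collections import defaultdict
from typing import DefaultDict, List, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Simplify a point cloud by replacing the points in each cubic voxel with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Per-voxel running sums: [sum_x, sum_y, sum_z, count]
    acc: DefaultDict[VoxelKey, List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0, 0.0])
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        s = acc[key]
        s[0] += x
        s[1] += y
        s[2] += z
        s[3] += 1.0
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in acc.values()]


if __name__ == "__main__":
    cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.50, 0.50, 0.50)]
    print(voxel_downsample(cloud, voxel_size=0.1))  # two points remain
```

A larger voxel size yields fewer points and a coarser mesh, so the value trades detail for streaming size.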

Volumetric Video Player

The Spaceport Volumetric Video Player plays sequences of frames. Each frame consists of one .mtl file, which contains the material definitions referenced by an OBJ file, and one 3D object file containing the compressed mesh of the object for that frame.
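To make the per-frame layout concrete, here is a sketch that writes one frame as a pair of Wavefront text files: an .mtl file declaring a textured material, and an OBJ file that references it through `mtllib` and `usemtl`. The naming scheme (`frame_00042.obj` and so on), the material name, and the texture image are hypothetical, and the OBJ here is plain uncompressed text; the post does not describe how Spaceport compresses its meshes.

```python
from typing import List, Sequence, Tuple


def frame_names(index: int, prefix: str = "frame") -> Tuple[str, str, str]:
    """Hypothetical naming scheme: frame_00042.obj / .mtl / .png."""
    stem = f"{prefix}_{index:05d}"
    return f"{stem}.obj", f"{stem}.mtl", f"{stem}.png"


def make_mtl(material: str, texture_file: str) -> str:
    """A minimal material whose diffuse color comes from a texture image."""
    return "\n".join([
        f"newmtl {material}",
        "Ka 1.000 1.000 1.000",
        "Kd 1.000 1.000 1.000",
        f"map_Kd {texture_file}",
    ]) + "\n"


def make_obj(mtl_file: str, material: str,
             vertices: Sequence[Tuple[float, float, float]],
             uvs: Sequence[Tuple[float, float]],
             faces: Sequence[Tuple[int, int, int]]) -> str:
    """Write a textured triangle mesh. `faces` use 0-based indices; OBJ is 1-based."""
    lines: List[str] = [f"mtllib {mtl_file}", f"usemtl {material}"]
    lines += [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"vt {u} {v}" for u, v in uvs]
    for a, b, c in faces:
        # v/vt pairs: here each vertex uses the texture coordinate with the same index.
        lines.append(f"f {a + 1}/{a + 1} {b + 1}/{b + 1} {c + 1}/{c + 1}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    obj_name, mtl_name, tex_name = frame_names(42)
    print(make_mtl("skin", tex_name))
    print(make_obj(mtl_name, "skin",
                   [(0, 0, 0), (1, 0, 0), (0, 1, 0)],
                   [(0, 0), (1, 0), (0, 1)],
                   [(0, 1, 2)]))
```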

Spaceport accepts input from a variety of sources, such as 6DoF controllers via input events. It also supports headsets such as the Oculus Rift, Oculus Go, Oculus Quest, and HTC Vive. With Google Cardboard, Spaceport also works on mobile devices.
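A 6DoF pose combines three translational and three rotational degrees of freedom and is commonly reported as a position plus a unit orientation quaternion (WebXR's `XRRigidTransform`, for example, exposes `position` and `orientation`). The sketch below converts such a pose into a 4×4 row-major transform matrix. It is a generic illustration, not Spaceport's input-handling code; the function name and the (w, x, y, z) quaternion order are assumptions.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def pose_to_matrix(position: Vec3, orientation: Quat) -> List[List[float]]:
    """Convert a 6DoF pose (position + rotation quaternion) into a 4x4 transform."""
    w, x, y, z = orientation
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("orientation quaternion must be non-zero")
    # Normalise so the 3x3 block stays a pure rotation even if the
    # reported quaternion has drifted slightly from unit length.
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    px, py, pz = position
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y),     px],
        [2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x),     py],
        [2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y), pz],
        [0.0, 0.0, 0.0, 1.0],
    ]


if __name__ == "__main__":
    # Hypothetical controller 1.5 m up, rotated 90 degrees about the vertical (y) axis.
    half = math.radians(90) / 2
    m = pose_to_matrix((0.0, 1.5, 0.0), (math.cos(half), 0.0, math.sin(half), 0.0))
    for row in m:
        print([round(v, 3) for v in row])
```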

Spaceport creates a panoramic effect that gives a greater sense of depth, and its customizable user interface lets you make the player look exactly how you want. You can load volumetric videos from your local device or save them to watch later.

Conclusion

We’re at the threshold of a new era, and Spaceport opens new possibilities for thinking beyond the flat screen. It supports simultaneous volumetric capture and multi-view point cloud alignment with several Azure Kinect cameras for free-viewpoint video applications. We’re also working on a deep-learning approach to volumetric capture that will remove some of the constraints imposed by the calibration object.

We hope you enjoyed this blog post.

Write to us to discuss cooperation opportunities and learn more about Spaceport, and try out the demo.